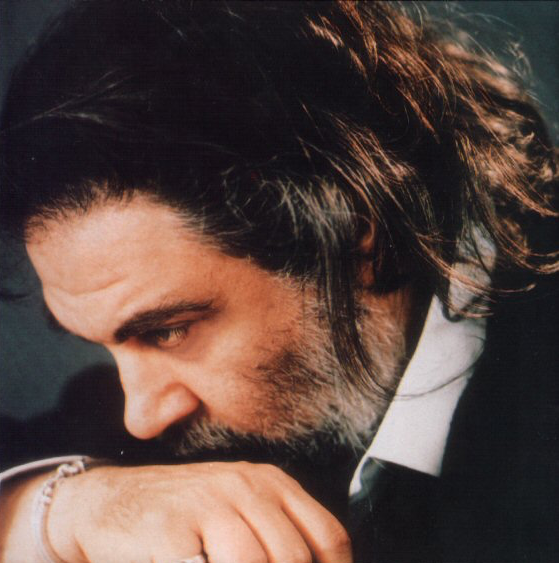

Yet another masterpiece from Tuncay Talayman, simply and humbly one of the most gifted talents in the video game industry. This time he portrays me as a GPU-burning sorcerer, which is literally true.

Thank you very much indeed, Tuncay!

Hi there!

Nowadays, my master slave is over-busy with developing something called “The Occult 2024”. Besides feeding and petting me twice a day, I’m afraid he has no spare time for writing new articles on this blog. So, I decided to take advantage of this situation by taking possession of his blog and reminding you of something of great importance (!) on behalf of my brothers and sisters… Mark my words! Twice is NOT enough. Cats –especially me– should be fed, petted and tickled behind the ears at least thrice a day… I mean it!

Yours Truly,

Karaböcük

“Innovation Engineering” is a great influence on the growth and survival of today’s world. As a method for solving technology and business problems to innovate, adapt, and enter new markets using the expertise in emerging technologies, it is an essential tool for creative minds.

Much like every other industry in the world, the video game development industry is more open to new ideas and products than ever. Actually, it has never been more critical. New technologies emerge, as do new business models. Thanks to innovation culture, the video game development process has become more than classic software engineering by evolving into modern innovation engineering.

The latest release of The Occult is a meticulous combination of software and innovation engineering. In order to maximize productivity and efficiency, outside-the-box thinking was vital. I had to come up with creative and unorthodox problem-solving methods. So, rather than waiting for a-ha! moments, I relied on cross-disciplinary research and practices for creativity, and classic engineering methodology for productivity; simply harnessing the best of both worlds… And it worked, charmingly!


The Occult version 2022.2 is released!

The Occult, a virtual gameplay programming ecosystem for Unreal Engine, is now available for both Intel and ARM architectures, offering improved cross-platform compatibility, exciting new features, enhancements, and a few bug fixes. As usual, all commercial and personal video game development projects that I am currently involved in will benefit from the new/enhanced features available in this release.

New Architecture

Multiprocessing System Architecture: A user-defined number of virtual processors can perform sync/async operations at the same time. Multiprocessing read/write operations on shared code/memory are 100% thread-safe at both the virtual and physical access levels.
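To make the idea concrete, here is a minimal standard C++ sketch of several virtual processors updating shared memory safely. It is not The Occult's actual implementation: the names `SharedMemory` and `run_virtual_processors` are hypothetical, each vCPU is assumed to map to one OS thread, and a single `std::mutex` is assumed as the simplest way to keep shared access thread-safe.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical shared memory: every access goes through one mutex.
class SharedMemory {
public:
    explicit SharedMemory(std::size_t cells) : cells_(cells, 0) {}

    // Read-modify-write of one cell, performed entirely under the lock.
    void add(std::size_t index, std::int64_t delta) {
        std::lock_guard<std::mutex> lock(mutex_);
        cells_.at(index) += delta;
    }

    std::int64_t read(std::size_t index) const {
        std::lock_guard<std::mutex> lock(mutex_);
        return cells_.at(index);
    }

private:
    mutable std::mutex mutex_;
    std::vector<std::int64_t> cells_;
};

// Runs `processor_count` hypothetical vCPUs, each on its own thread.
// Every vCPU increments cell 0 `steps` times; the final value is returned.
inline std::int64_t run_virtual_processors(unsigned processor_count,
                                           unsigned steps) {
    SharedMemory memory(1);
    std::vector<std::thread> processors;
    processors.reserve(processor_count);
    for (unsigned id = 0; id < processor_count; ++id) {
        processors.emplace_back([&memory, steps] {
            for (unsigned i = 0; i < steps; ++i) {
                memory.add(0, 1);
            }
        });
    }
    for (std::thread& processor : processors) {
        processor.join();
    }
    return memory.read(0);
}
```

Because every increment happens under the lock, no update is lost: `run_virtual_processors(4, 1000)` always yields 4000, regardless of how the threads are scheduled.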

Multiple Stacks for Multiple Processors: A private stack is assigned to each vCPU, and a public stack is shared among all processors. The public stack is mainly used for level-scope variables, and can be accessed via the Index/Data bus. Contrary to the public stack, private stacks do not use Occult’s bus architecture anymore. They are hardwired to parent vCPUs for exceptionally low-latency access!
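The private/public split can be pictured with a small standard C++ sketch. This is an illustration under stated assumptions rather than The Occult's real design: `PublicStack` and `VirtualCpu` are hypothetical names, a mutex stands in for the shared bus, and "hardwired" is modelled as a stack owned directly by its vCPU, so it needs no locking.

```cpp
#include <cassert>
#include <cstdint>
#include <mutex>
#include <vector>

// Hypothetical public stack: shared by all vCPUs, so each access is locked.
class PublicStack {
public:
    void push(std::int64_t value) {
        std::lock_guard<std::mutex> lock(mutex_);
        data_.push_back(value);
    }

    // Pops the top value into `out`; returns false if the stack is empty.
    bool pop(std::int64_t& out) {
        std::lock_guard<std::mutex> lock(mutex_);
        if (data_.empty()) return false;
        out = data_.back();
        data_.pop_back();
        return true;
    }

private:
    std::mutex mutex_;
    std::vector<std::int64_t> data_;
};

// Hypothetical vCPU: it owns its private stack, so only this vCPU touches it
// and no synchronization is required on that path.
class VirtualCpu {
public:
    explicit VirtualCpu(PublicStack& shared) : shared_(shared) {}

    void push_private(std::int64_t value) { private_.push_back(value); }

    // Pops the top private value into `out`; returns false if empty.
    bool pop_private(std::int64_t& out) {
        if (private_.empty()) return false;
        out = private_.back();
        private_.pop_back();
        return true;
    }

    // Moves the top private value onto the shared public stack.
    bool publish_top() {
        std::int64_t value = 0;
        if (!pop_private(value)) return false;
        shared_.push(value);
        return true;
    }

private:
    std::vector<std::int64_t> private_;
    PublicStack& shared_;
};
```

In this sketch, the cost difference comes from the lock alone: private pushes and pops are plain vector operations, while every public access pays for synchronization.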

Virtual/Native Code Switching: Instant code switching at instruction-precision level of detail creates an opportunity to inject native C++ code into virtual Assembly code and vice versa, opening up endless possibilities for hardcore code optimization.
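One way to picture switching at instruction granularity is an interpreter whose program can hold native C++ callables next to virtual opcodes. The sketch below is only an assumption about how such mixing might look, not The Occult's instruction set: `Op`, `Instruction`, `make_push`, `make_add`, `make_native` and `run` are all hypothetical, and the program is assumed to be well formed (no stack underflow checks).

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <utility>
#include <vector>

using Stack = std::vector<std::int64_t>;

// Hypothetical opcodes: two virtual instructions plus a native escape hatch.
enum class Op { Push, Add, Native };

struct Instruction {
    Op op;
    std::int64_t operand;               // used by Op::Push only
    std::function<void(Stack&)> native; // used by Op::Native only
};

inline Instruction make_push(std::int64_t value) {
    return {Op::Push, value, nullptr};
}

inline Instruction make_add() {
    return {Op::Add, 0, nullptr};
}

inline Instruction make_native(std::function<void(Stack&)> fn) {
    return {Op::Native, 0, std::move(fn)};
}

// Executes the program one instruction at a time. A native instruction runs
// ordinary C++ against the same stack the virtual instructions use.
// Returns the top of the stack, or 0 if the stack ends up empty.
inline std::int64_t run(const std::vector<Instruction>& program) {
    Stack stack;
    for (const Instruction& ins : program) {
        switch (ins.op) {
        case Op::Push:
            stack.push_back(ins.operand);
            break;
        case Op::Add: {
            const std::int64_t rhs = stack.back();
            stack.pop_back();
            const std::int64_t lhs = stack.back();
            stack.pop_back();
            stack.push_back(lhs + rhs);
            break;
        }
        case Op::Native:
            ins.native(stack);
            break;
        }
    }
    return stack.empty() ? 0 : stack.back();
}
```

For example, a program built from `make_push(2)`, `make_push(3)`, `make_add()` and a native lambda that multiplies the top of the stack by 10 leaves 50 on the stack: the virtual code computes 5 and the native step scales it.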

New Features

Enhancements

Improved Game Executable Compatibility

Improved C++ Code Compatibility

Improved C++ Development Environment Compatibility

Bug Fixes

Credo quia absurdum!


In terms of productivity, efficiency, and profitability, coding with a versatile range of “cross-platform” tools is a must for the 21st-century video game developer.

However, regarding Unreal Engine’s cross-platform features, debugging, fine-tuning and optimizing game code simultaneously on multiple platforms can sometimes be a real headache even for veteran developers. It is a complex, time-consuming and error-prone task by its very nature. And, this is where The Occult’s latest release comes into play…


The Occult version 2022.1 is released!

I am truly excited to announce the latest release of The Occult. It is now available for both Intel and ARM architectures, offering improved platform compatibility, exciting new features, various enhancements, and a few bug fixes. As of today, all commercial and personal video game development projects that I am currently involved in will benefit from the new/enhanced features available in this release.

Enhancements

Improved Game Executable Compatibility

Improved C++ Code Compatibility

Improved C++ Development Environment Compatibility

Bug Fixes

Vivre libre ou mourir!


A Synchronous/Asynchronous Virtual Machine Architecture

for Unreal Engine

Mert Börü

Video Game Developer

mertboru@gmail.com

Abstract

The “Occult” virtual machine architecture is a cross-platform C++ middleware designed and implemented for Unreal Engine. The primary goal of the Occult is to deliver super-optimized AAA-grade games. By adding a very thin layer on top of the Unreal Engine C++ API, the Occult provides a virtual microcomputer architecture with a synchronous/asynchronous 64-bit CPU, various task-specific 32-bit coprocessors, and a modern assembly language gameplay programming ecosystem for handling real-time memory/asset management and in-game abstract object/data streaming. It can be used as a standalone solution or as a supplementary tool for existing Blueprint/C++ projects.

(Cover Illustration: “Inferno”, Canti XXVI-XXVIII by Federico Zuccari – © Uffizi collection)

For the 700th anniversary of the death of Dante, the Uffizi Gallery (Florence, Italy) is curating a virtual exhibition of 88 rarely displayed drawings of “The Divine Comedy”, as part of the nationwide celebrations.

The mediaeval poet and philosopher Dante Alighieri (1265-1321), known as the Father of the Italian language, is credited with making literature accessible to the public by using the common Tuscan dialect instead of Latin, as well as paving the way for important Italian writers such as Petrarch and Boccaccio.

“The path to Paradise begins in Hell.” – Dante

Dante’s masterpiece, “The Divine Comedy”, is an epic poem in three parts recounting a pilgrim’s travels through Hell, Purgatory and Heaven. As an allegory, the poem represents the journey of the soul toward God, with the Inferno describing the recognition and rejection of sin. The message in Dante’s Inferno is that human beings are subject to temptation and commit sins, leaving no escape from the eternal punishments of Hell. However, human beings have free will, and they can make choices to avoid temptation and sin, ultimately earning the eternal rewards of Heaven.

“To rebehold the stars”

To mark the 700th anniversary of the Italian poet’s death in 2021, the Uffizi Gallery is providing Dante-centric artworks online “for free, any hour of the day, for everyone”, says Uffizi director Eike Schmidt. He states that these drawings are a “great resource” for Dante scholars and students, as well as “anyone who likes to be inspired by Dante’s pursuit of knowledge and virtue.”

The virtual exhibition consists of 88 high-resolution images of works by the 16th-century Renaissance artist Federico Zuccari. The pencil-and-ink drawings, in contrasting shades of black and red, were originally bound in a volume with each illustration opposite the corresponding verse in Dante’s epic poem. They were completed during Zuccari’s stay in Spain from 1586 to 1588, and became part of the Uffizi collection in 1738.

Dante Illustrated links:

This event is part of the nationwide celebrations for the 700th anniversary of the death of Dante Alighieri, but also aims to symbolize the rebirth of Italy and the art world.

(Cover Photo: © www.yukokusamurai.com)

When I appreciate ‘the moment’, happiness follows. Happiness is often in the little things, and the year 2018 has offered me a bunch of them. I am sincerely grateful for all the little things I have been given this year… Now is the time to cherish the ‘moments of joy’ by sharing a few snapshots, in no particular order.


Unreal Fest Europe 2018

A three-day event designed exclusively for game creators using Unreal Engine, with speakers drawn from Epic, platform owners and some of the leading development studios in Europe, took place in Berlin on April 24-27. Such a great opportunity for meeting old friends and making new ones. – Thank you Epic!

© 2018 – All event photos by Saskia Uppenkamp


A Visit to NERD

It is no secret that Nintendo is using Unreal Engine 4 for their current and upcoming line of Switch games. As an Unreal Engine developer, I had the privilege of visiting Nintendo European Research & Development (NERD) in Paris for a 1-on-1 meeting. Due to the usual Nintendo regulations, I’m not allowed to share any kind of information about the top-notch engineering stuff that I witnessed, but that can’t prevent me from telling you how impressed I was. All I can say is “WOW!” 😉

I have great admiration and respect for Japanese business culture, which is genuinely represented in Paris. Thank you very much for your kind hospitality!


IndieCade Europe 2018

IndieCade continues to support the development of independent games by organizing a series of international events to promote the future of indie games. This year, we had the 3rd installment of the European event, and it is getting better and bigger each year. I love the indie spirit. No matter how experienced we are, we always have new things to learn from each other.

From my perspective, the most iconic moment of the event was meeting and chatting with Japanese game developer Hidetaka Suehiro (aka “Swery65”), the designer of The Last Blade (1997) and The Last Blade 2 (1998). Both games were released by SNK for Neo Geo MVS – my all-time favourite 2D console.

So, guess what we talked about… Fighting games? No… Neo Geo? No… Game design? No… Believe it or not, our main topic was “best hookah (water pipe) cafés in Istanbul”. I was simply amazed to discover that he knows Istanbul better than I do. Swery65 is full of surprises!


mini-RAAT Meetings @ MakerEvi

Try to imagine an unscheduled last-minute “members only” meeting, hosting crème de la crème IT professionals ranging from ex-Microsoft engineers to gifted video game artists, acclaimed musicians, network specialists, and many other out-of-this-world talents, in addition to a bunch of academicians with a hell of a lot of titles and degrees! So, what on earth is the common denominator that brings these gentlemen together, at least once or twice a year? Retrocomputing, for sure… Bundled with fun, laughter and joy! 🙂

© 2018 – All event photos by Alcofribas

Special thanks to our host, MakerEvi – a professional ‘Maker Movement Lab’ dedicated to contemporary DIY culture, fueled by the artisan spirit and kind hospitality of The Gürevins. An exceptional blend of local perspective and global presence.


Dila’s Graduation

This year, my dear daughter graduated from Collège Sainte Pulchérie YN2000 with a DELF B1 level French diploma, a compulsory certificate to follow studies in the French higher education system. Being a hardworking student, she has passed the national high school entrance exam, and is currently attending Lycée Français Saint-Michel. – “I am proud of you… Bonne chance, ma chérie!”

“The bond that links your true family is not one of blood,

but of respect and joy in each other’s life.”

– Richard Bach

Family is a ‘sanctuary’ for the individual. If we are blessed enough to have a loving, happy, and peaceful family, we should be grateful every day for it. It is where we learn to feel the value of being part of something greater than ourselves. Love is a powerful thing; we just have to be open to it.


Life is a Celebration

For all the moments I have enjoyed and to all my dear friends & members of my family who made those meaningful moments possible, I would like to propose a toast. Would you like to join me for a glass of absinthe, so that we keep on chasing our ‘green fairies’ together and forever? 😉
