After 17 years of yearning to visit the Musée des Arts et Métiers (Paris) once again, I have finally managed to arrange a second encounter. This time, with my family!

If I were to summarize what the Musée des Arts et Métiers has always meant to me, it would simply be that it is a Chapel for Arts and Crafts that houses marvels of the Enlightenment. Something more than an ordinary science museum; a temple of science, actually. During my first visit in 1999, I noticed that the Chapel had sculpted my heart and mind in an irreversible way, leading to a more open-minded vision. It was certainly an initiation ceremony for a tech guy like me!

Founded in 1794 by Henri Grégoire, the Conservatoire National des Arts et Métiers, “a store of new and useful inventions”, is a museum of technological innovation. An extraordinary place where science meets faith. Not a religious faith for sure; a faith in contributing to the betterment of society through Science. Founded by anti-clerical French revolutionaries to celebrate the glory of science, it is no small irony that the museum is partially housed in the former Abbey Church of Saint Martin des Champs.

“… an omnibus beneath the gothic vault of a church!”

The museum is HUGE! Its mind-blowing 2,400 inventions are spread across 3 floors, and I assure you that dizziness awaits you at the end of the day. An aeroplane suspended in mid-flight above a monumental staircase, automatons springing to life in a dimly lit theatre, an omnibus beneath the gothic vault of a church, and a Sinclair ZX Spectrum… These are just a few of the sights and surprises that make the Musée des Arts et Métiers one of Paris’ most unforgettable experiences.

A picture is worth a thousand words. So, let’s catch a glimpse of the museum through a bunch of photos that we took…

“You enter and are stunned by a conspiracy in which the sublime universe of heavenly ogives and the chthonian world of gas guzzlers are juxtaposed.” – (Umberto Eco, Foucault’s Pendulum, 1988)

Ader Avion III – Steampunk bat plane!

On October 9, 1890 a strange flying machine, christened ‘Éole’, took off for a few dozen meters from a property at Armainvilliers. The success of this trial, witnessed by only a handful of people, won Clément Ader, the machine’s inventor, a grant from the French Ministry of War to pursue his research. Further tests were carried out with his ‘Avion no.3’ on October 14, 1897 in windy, overcast weather. The aircraft took off intermittently over a distance of 300 meters, then suddenly swerved and crashed. The ministry withdrew its funding and Ader was forced to abandon his aeronautical experiments, despite being the first to understand aviation’s military importance. He eventually donated his machine to the Conservatoire in 1903.

Like his earlier ‘Ader Éole’, Avion no.3 was the result of the engineer’s study of the flight and morphology of chiropterans (bats), and of his meticulous choice of materials to lighten its structure (unmanned, it weighs only 250 kg) and improve its load-bearing capacity. Its boiler supplied two 20-horsepower steam engines driving four-bladed propellers that resembled gigantic quill feathers. The pilot was provided with foot pedals to control both the rudder and the rear wheels… – A steam-powered bat plane that really flew!

Cray-2 Supercomputer

The Cray-2, designed by American engineer Seymour Cray, was the most powerful computer in the world when it was first marketed in 1985. A year after the Russian ‘M-13’, it was the second computer to break the gigaflop (a billion floating-point operations per second) barrier.

It used the vector processing principle, via which a single instruction prompts a cascade of calculations carried out simultaneously by several processors. Its very compact C-shaped architecture minimized distances between components and increased calculation speed. To dissipate the heat produced by its hundreds of thousands of microchips, the ensemble was bathed in a heat conducting and insulating liquid cooled by water.

The Cray-2 was ideal for major scientific calculation centres, particularly in meteorology and fluid dynamics. It was also notable for being the first supercomputer to run “mainstream” software, thanks to UNICOS, a Unix System V derivative with some BSD features. The one exhibited at the museum was used by the École Polytechnique in Paris from 1985 to 1993.

(For more information, you can check the original Cray-2 brochure in PDF format.)

IBM 7101 CPU Maintenance Console

In 1961, the IBM 7101 Central Processing Unit Maintenance Console enabled the detection of CPU malfunctions. It provided visual indications for monitoring control lines and following data flow. Switches and keys on the console allowed the operator to simulate automatic operation manually. These operations were simulated at machine speed or, in most cases, at a single-step rate. – In plain English: A hardware debugger!

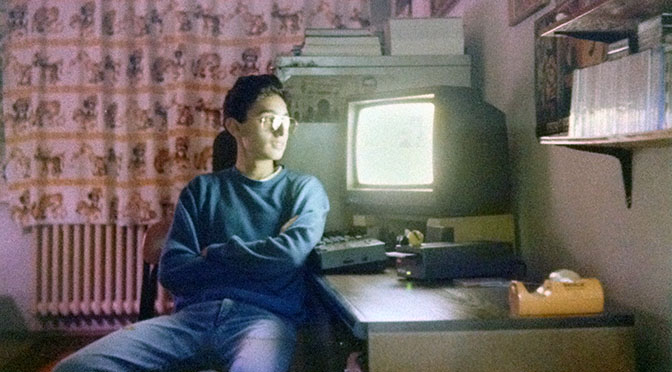

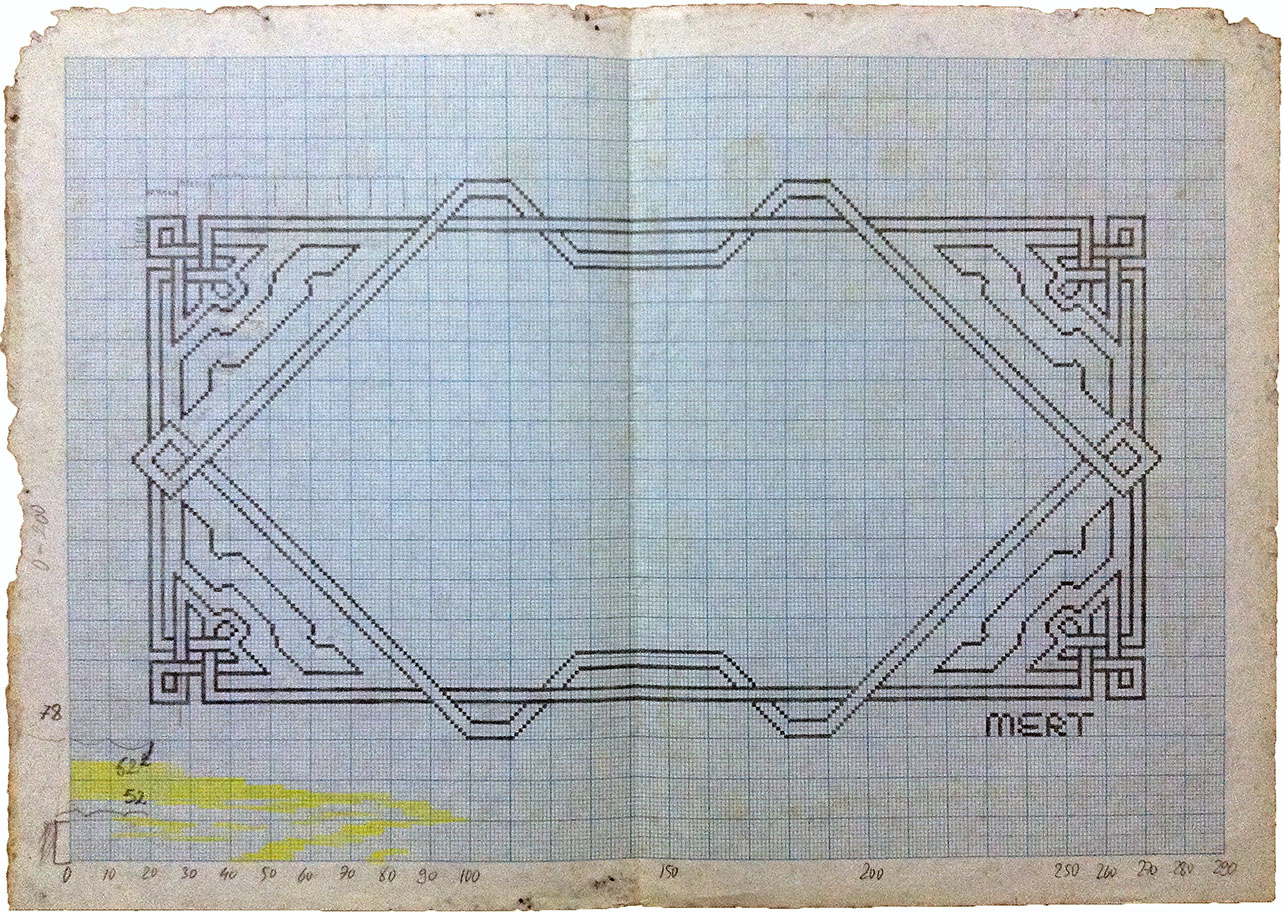

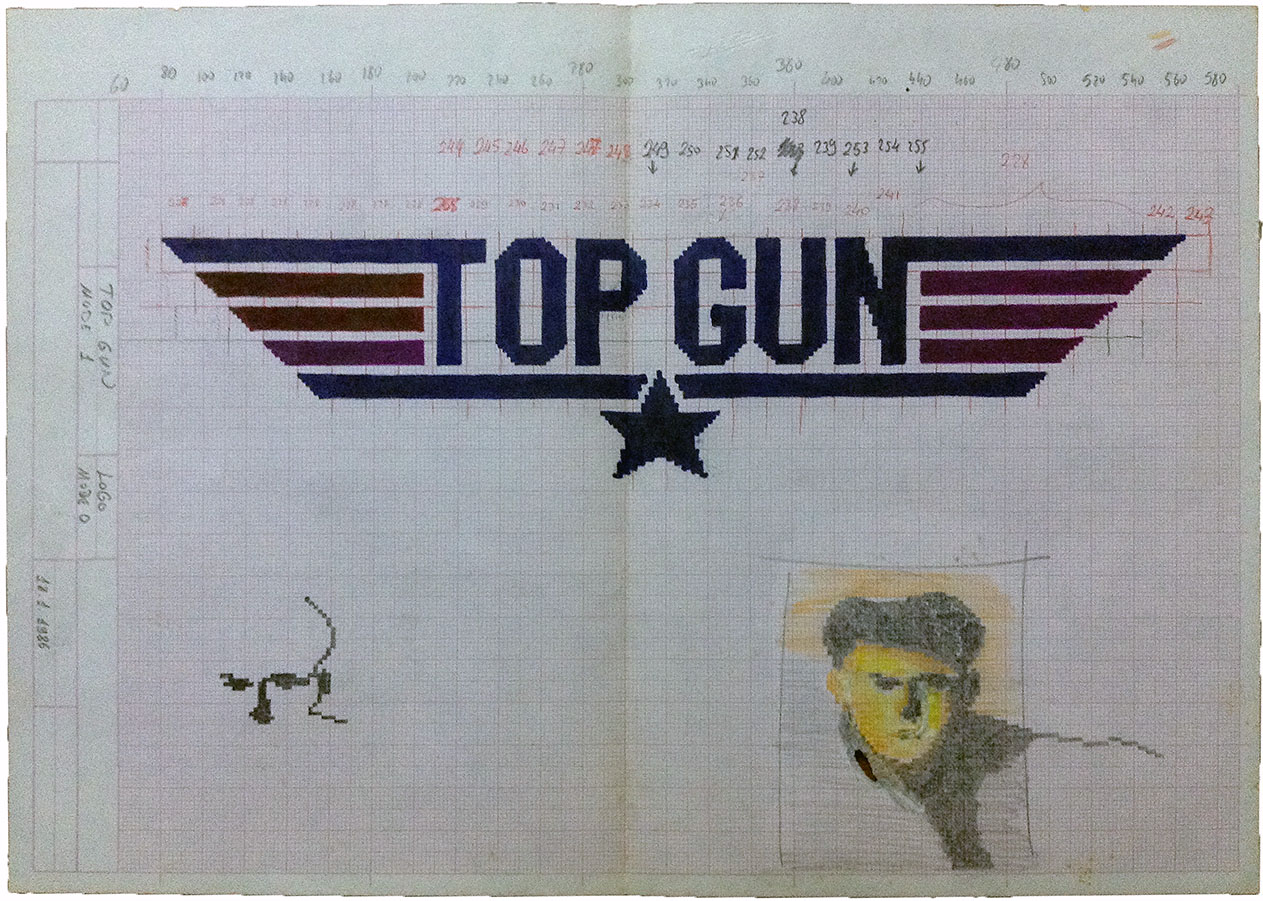

A salute to the 8-bit warriors!

My first love: a Sinclair ZX81 home computer (second row, far right) with a hefty 1024 bytes of memory and membrane buttons, beside the original Sinclair ZX Spectrum with its rubber keyboard, diminutive size and distinctive rainbow motif… I feel like I belong to that showcase! Reserve some space for me, boys, will you? 😉

The most interesting items in the retro computer section are the Thomson TO7/70 (third row, far left) and Thomson MO5 (third row, in the middle) microcomputers. Both models were chosen to equip schools as part of the ‘computers for all’ plan implemented by the French government in 1985 to encourage the use of computers in education and support the French computer industry, much as the British government had done with BBC microcomputers. The Thomson TO7/70 was the flagship model. It had the ‘TO’ (télé-ordinateur) prefix because it could be connected to a television set via a SCART plug, so that a dedicated computer monitor was not necessary. It also had a light pen that allowed interaction with software directly on the screen, as well as a built-in cassette player for reading/recording programmes written in BASIC.

Camera Obscura

From an optical standpoint, the camera obscura is a simple device which requires only a converging lens and a viewing screen at opposite ends of a darkened chamber or box. It is essentially a photographic camera without the light-sensitive film or plate.

The first record of the camera obscura principle goes back to Ancient Greece, when Aristotle noticed, during a partial eclipse of the sun, how light passing through a small hole into a darkened room produces an image on the opposite wall. In the 10th Century, the Arab scholar Ibn al-Haytham used the camera obscura to demonstrate how light travels in straight lines. In the 13th Century, the camera obscura was used by astronomers to view the sun. After the 16th Century, camera obscuras became an invaluable aid to artists, who used them to create drawings with perfect perspective and accurate detail. Portable camera obscuras were made for this purpose. Various painters have employed the device, the best-known being Canaletto, whose own camera obscura survives in the Correr Museum in Venice. The English portrait painter Sir Joshua Reynolds also owned one. And, arguably, Vermeer was also on the list of owners.

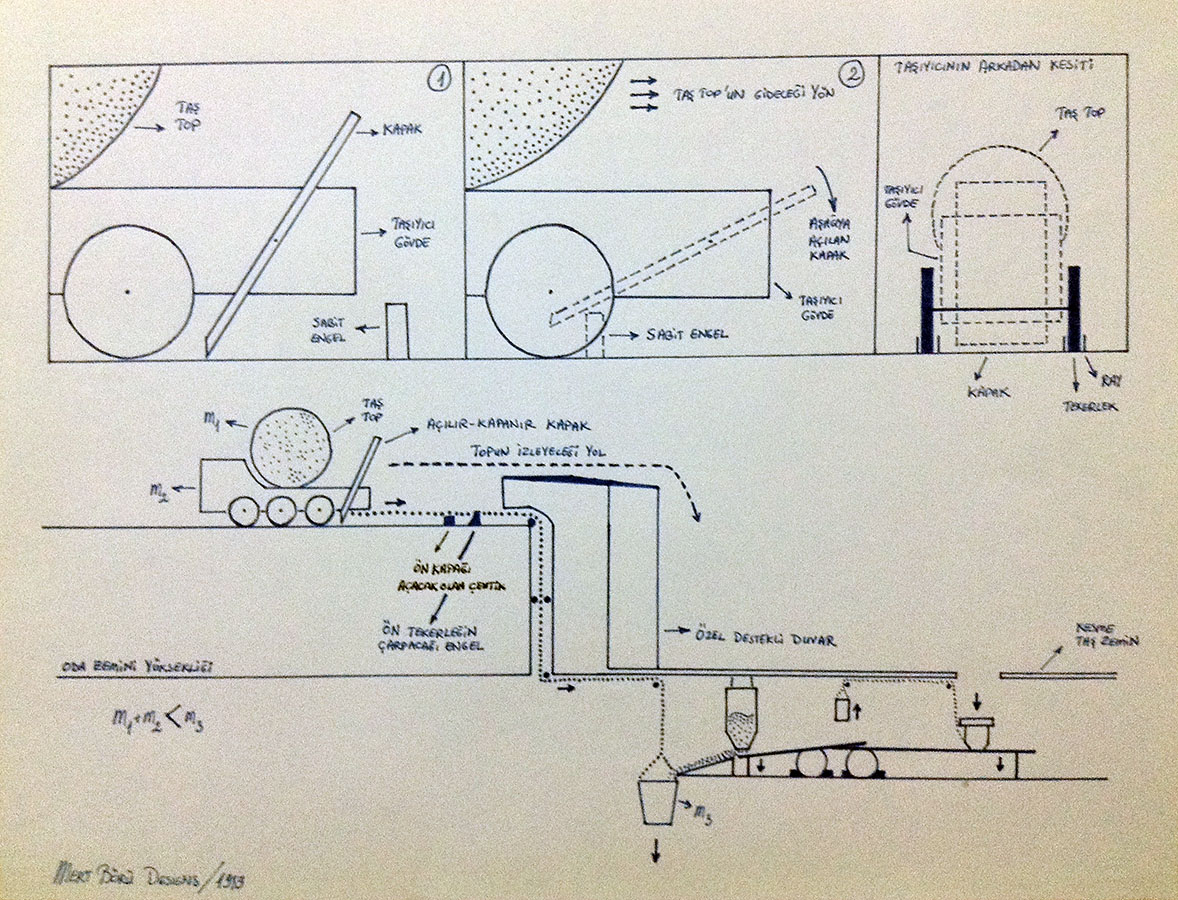

“… an invaluable tool for video game development”

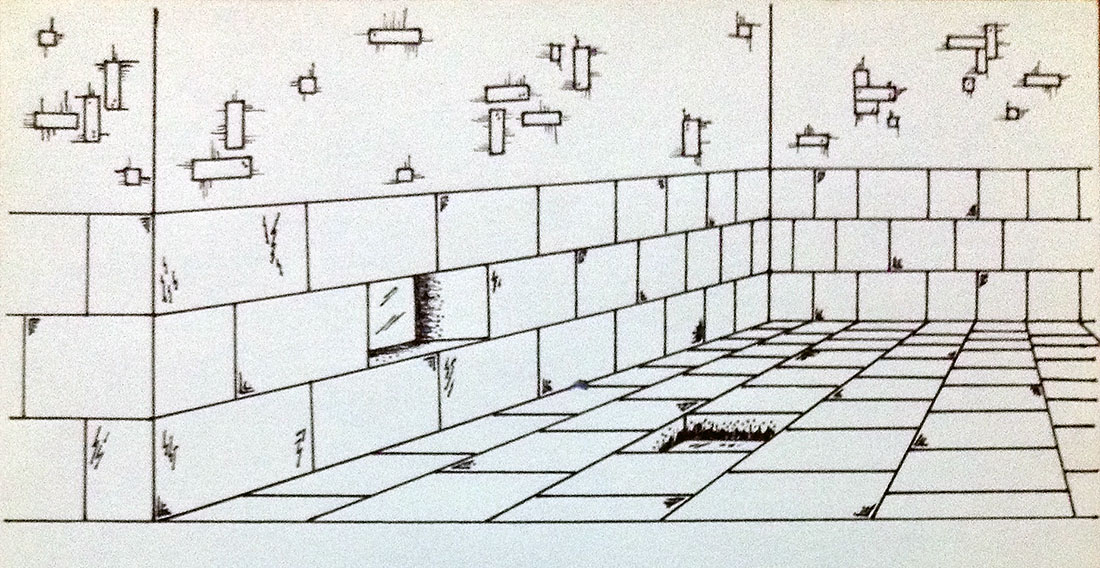

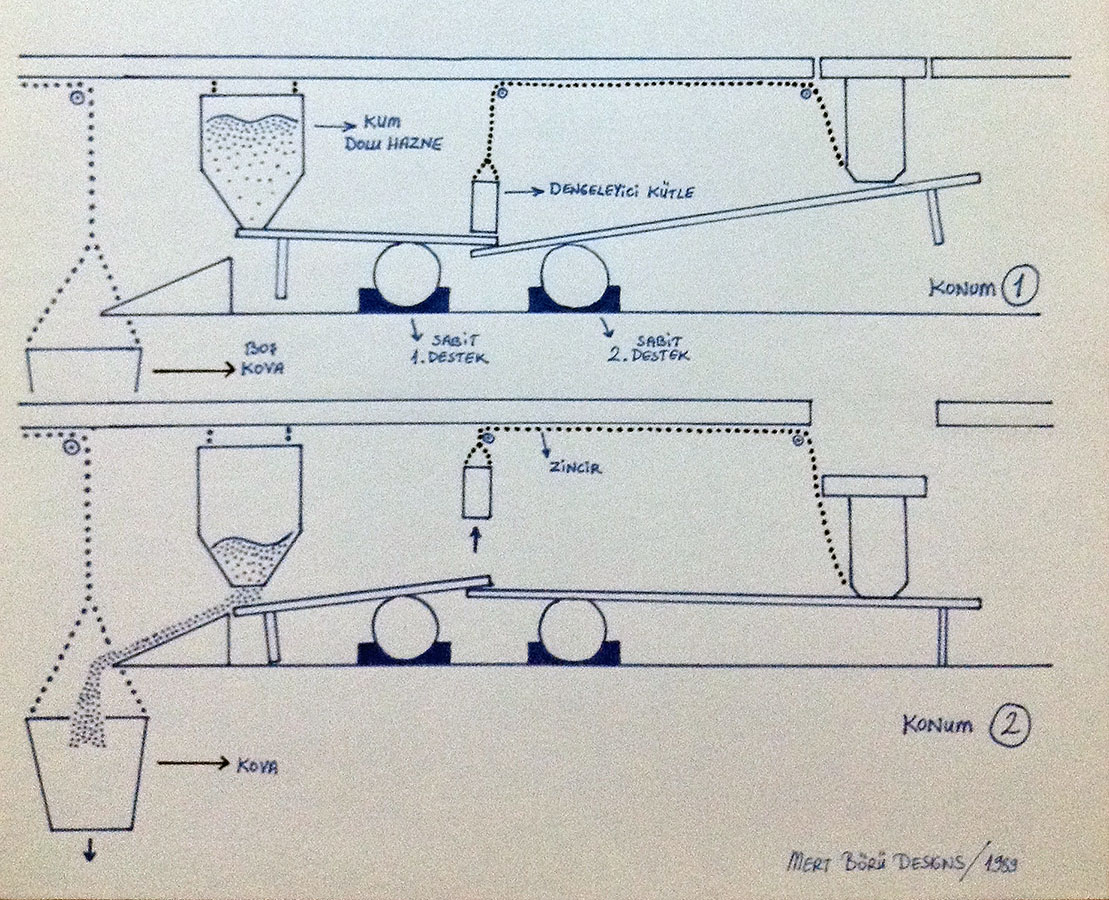

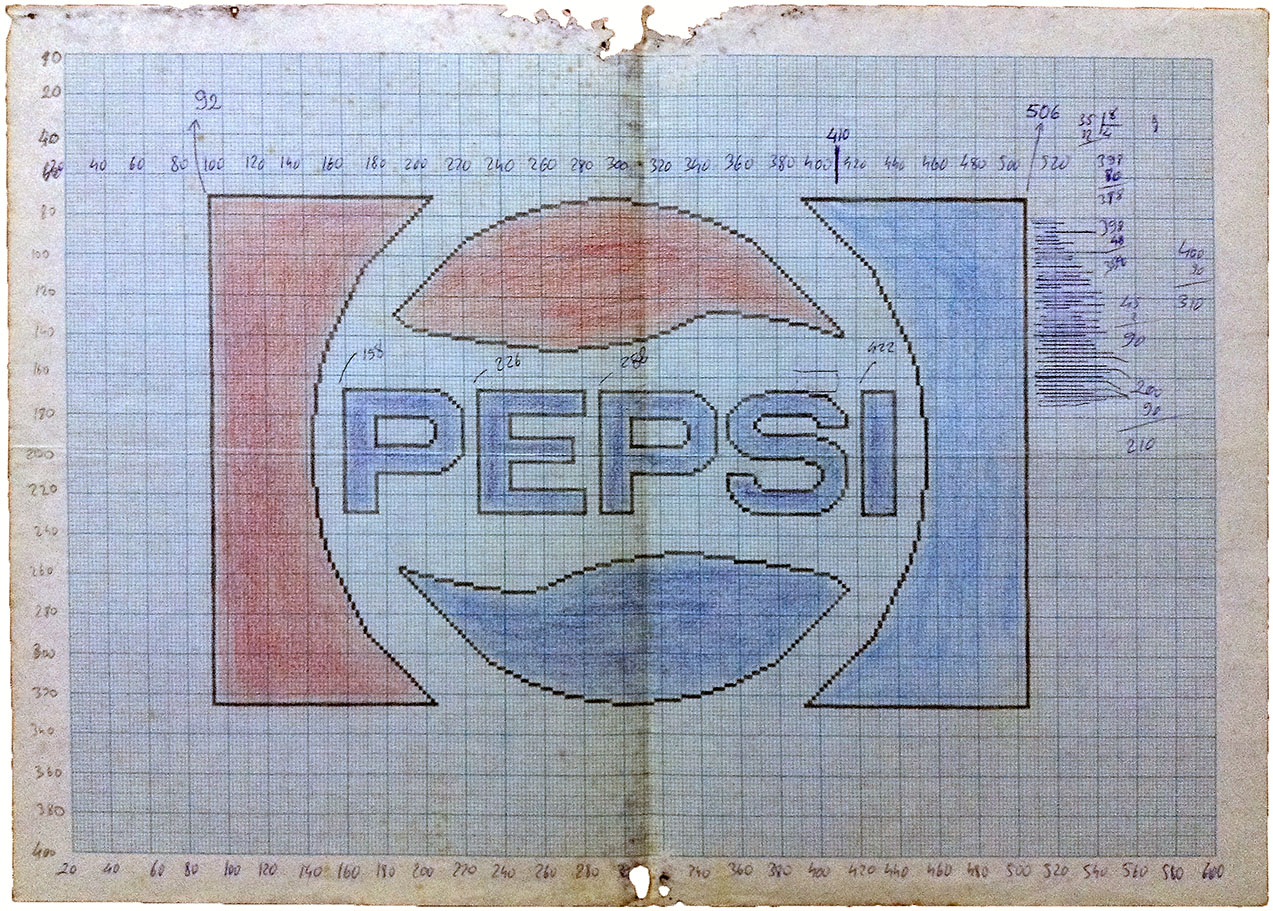

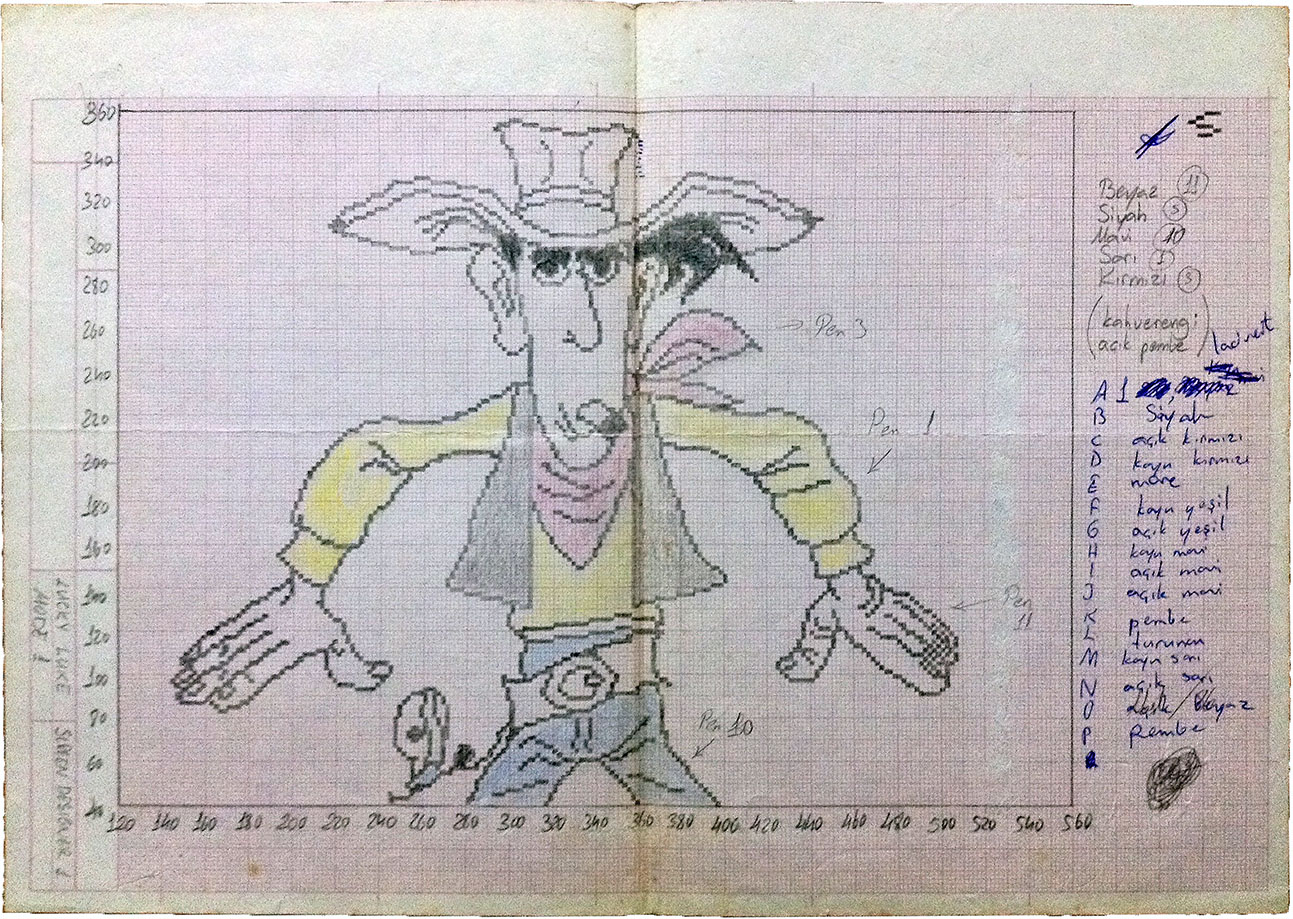

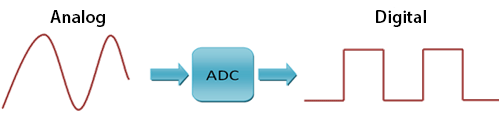

Besides the scientific achievements, the camera obscura has a very special meaning to me… In the early 80s, I used to draw illustrations on semi-transparent graph paper, and transfer these images pixel-by-pixel to my Sinclair ZX Spectrum home computer. It was my job. I used to design title/loader screens and various sprites for commercial video games. Drawing illustrations on semi-transparent graph paper was easy. However, as I started copying real photos, I noticed that scaling from the original image to the output resolution of the graph paper was a tedious process. Before I got completely lost, my dad advised me to use an ancient photography technique, and helped me to build my first camera obscura. It simply worked! As a result, my video game development career somehow accelerated thanks to a ‘wooden box’.

(For more details, you can read my article on 8/16-bit video game development era.)

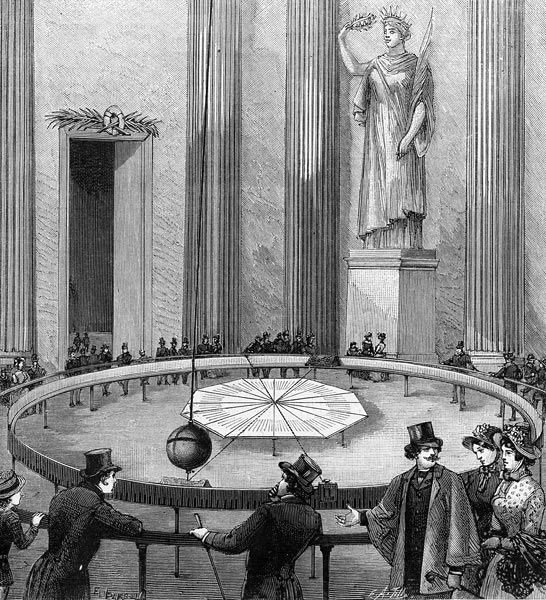

Foucault’s Pendulum

The year was 1600: Giordano Bruno, the link between Copernicus and Galileo, was burned at the stake for heresy when he insisted that the Earth revolved around the Sun. But his theory was soon to become a certainty, and the next two-and-a-half centuries were full of excitement for the inquiring mind. On February 3, 1851, Léon Foucault finally proved that our planet is a spinning top!

His demonstration was so beautifully simple and his instrument so modest that it was a fitting tribute to the pioneers of the Renaissance. Even more rudimentary demonstrations had already been attempted, in vain, by throwing heavy objects from a great height, in the hope that the Earth’s rotation would make them land a little to one side. Foucault, having observed that a pendulum’s plane of oscillation is invariable, looked for a way to verify the movement of the Earth in relation to this plane, and to prove it. He attached a stylus to the sphere of the pendulum, so that it brushed against a bed of damp sand. He made his first demonstration to his peers, in the Observatory’s Meridian room at the beginning of February, and did it again in March for Prince Bonaparte, under the Pantheon’s dome. The pendulum he used there was 67 meters high, and swung in 16-second periods, thereby demonstrating the movement of the Earth in a single swing.

This experimental system, with the childlike simplicity of its modus operandi, may have been one of the last truly ‘public’ discoveries, before scientific research retreated into closed laboratories, abstruse protocols and jargon. Léon Foucault is said to have given up his medical studies because he couldn’t stand the sight of blood. If he hadn’t done so, no doubt someone else would have proved the rotation of the Earth – but with a far less intriguing device!

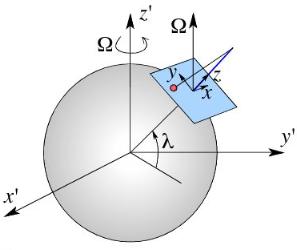

Technically speaking…

In essence, the Foucault Pendulum is a pendulum whose damping time is long enough for the precession of its plane of oscillation to be observed, typically over an hour or more. A whole revolution of the plane of oscillation takes one day at the poles and progressively longer at lower latitudes. At the equator, the plane of oscillation does not rotate at all.
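The latitude dependence can be made concrete with the standard sine-law result, which the paragraph above does not state explicitly: the plane of oscillation completes one turn in one sidereal day divided by the sine of the latitude. Here is a minimal Python sketch under that assumption. The function name and the approximate latitude used for Paris are my own illustrative choices, not figures from the museum.

```python
import math

SIDEREAL_DAY_HOURS = 23.9345  # one rotation of the Earth relative to the stars

def precession_period_hours(latitude_deg: float) -> float:
    """Hours for the swing plane to complete one full turn (sine law)."""
    s = abs(math.sin(math.radians(latitude_deg)))
    if s == 0.0:
        return math.inf  # at the equator the plane does not rotate at all
    return SIDEREAL_DAY_HOURS / s

if __name__ == "__main__":
    # Latitudes are illustrative; 48.85 is an approximate value for Paris.
    for name, lat in [("North Pole", 90.0), ("Paris (approx.)", 48.85), ("Equator", 0.0)]:
        print(f"{name}: {precession_period_hours(lat):.1f} h")
```

For Paris this gives a little under 32 hours per full turn, or roughly 11 degrees per hour, which is why the drift becomes visible within an hour.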

In rotating systems, the two fictitious forces that arise are the Centrifugal and Coriolis forces. The centrifugal force cannot be used locally to demonstrate the rotation of the Earth because the ‘vertical’ in every location is defined by the combined gravity and centrifugal forces. Thus, if we wish to demonstrate dynamically that the Earth is rotating, we should consider the Coriolis effect. The Coriolis Force responsible for the pendulum’s precession is not a force per se. Instead, it is a fictitious force which arises when we solve physics problems in non-inertial frames of reference, i.e., in accelerating coordinate systems where the Law of Inertia (Newton’s first law) no longer holds and Newton’s second law, F=dp/dt, acquires extra fictitious terms.
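To see the Coriolis term at work, here is a rough numerical sketch in Python. It is not Foucault's own reasoning: it simply integrates a small-amplitude pendulum in the Earth's rotating frame and reads off the direction of the swing plane. The wire length (67 m), the 1 m release distance, the time step and the function name are all assumptions chosen for illustration, and in this approximation the precession rate does not depend on the wire length.

```python
import cmath
import math

G = 9.81                             # gravitational acceleration, m/s^2
EARTH_RATE = 2 * math.pi / 86164.1   # Earth's rotation rate, rad/s (sidereal day)

def swing_plane_angle_deg(latitude_deg, duration_s, length_m=67.0, dt=0.05):
    """Orientation of the swing plane (degrees) after duration_s seconds.

    Horizontal position is a complex number z = x + i*y (x east, y north).
    Small-angle equation of motion in the rotating frame:
        z'' = -(g/L) * z - 2i * Omega_z * z'
    The second term is the horizontal Coriolis acceleration, with
    Omega_z = EARTH_RATE * sin(latitude).
    """
    omega0_sq = G / length_m
    omega_z = EARTH_RATE * math.sin(math.radians(latitude_deg))

    def accel(z, v):
        return -omega0_sq * z - 2j * omega_z * v

    def rk4_step(z, v):
        # Classic fourth-order Runge-Kutta step for the pair (z, v).
        k1z, k1v = v, accel(z, v)
        k2z, k2v = v + 0.5 * dt * k1v, accel(z + 0.5 * dt * k1z, v + 0.5 * dt * k1v)
        k3z, k3v = v + 0.5 * dt * k2v, accel(z + 0.5 * dt * k2z, v + 0.5 * dt * k2v)
        k4z, k4v = v + dt * k3v, accel(z + dt * k3z, v + dt * k3v)
        z_new = z + dt * (k1z + 2 * k2z + 2 * k3z + k4z) / 6
        v_new = v + dt * (k1v + 2 * k2v + 2 * k3v + k4v) / 6
        return z_new, v_new

    z, v = 1.0 + 0.0j, 0.0j          # released at rest, 1 m to the east
    for _ in range(int(duration_s / dt)):
        z, v = rk4_step(z, v)

    # Follow one more swing and keep the point of greatest displacement:
    # its direction is the current orientation of the swing plane.
    period = 2 * math.pi / math.sqrt(omega0_sq)
    farthest = z
    for _ in range(int(period / dt)):
        z, v = rk4_step(z, v)
        if abs(z) > abs(farthest):
            farthest = z

    angle = math.degrees(cmath.phase(farthest))
    # A plane has no direction, so fold the angle into (-90, 90].
    if angle > 90:
        angle -= 180
    elif angle <= -90:
        angle += 180
    return angle

if __name__ == "__main__":
    # 48.85 degrees is an approximate latitude for Paris.
    print(f"{swing_plane_angle_deg(48.85, 3600):.1f} degrees after one hour")
```

At the latitude of Paris the sketch reports a drift of about 11 degrees after one hour. The sign is negative, meaning clockwise when seen from above. At latitude 0 the Coriolis term vanishes and the plane stays put.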

Fringe science: The Allais anomaly!

The rate of rotation of Foucault’s pendulum is pretty constant at any particular location, but during an experiment in 1954, Maurice Allais, an economist who was awarded the Nobel Prize in Economics in 1988, got a surprise. His experiment lasted for 30 days, and one of those days happened to be the day of a total solar eclipse. Instead of rotating at the usual rate, as it did for the other 29 days, his pendulum turned through an angle of 13.5 degrees within the space of just 14 minutes. This was particularly surprising as the experiment was conducted indoors, away from the sunlight, so there should have been no way the eclipse could affect it! But in 1959, when there was another eclipse, Allais saw exactly the same effect. It came to be known as the ‘Allais effect’, or ‘Allais anomaly’.

The debate over the Allais effect still lingers. Some argue that it isn’t a real effect. Some argue that it is real, but due to external factors such as atmospheric changes of temperature, pressure and humidity, which can occur during a total eclipse. Others argue that it is real, and due to “new physics”. This latter view has become popular among supporters of alternative gravity models. Allais himself claimed that the effect was the result of new physics, though he never proposed a clear mechanism.

“… there is no conventional explanation for this.”

Now, here comes the most interesting part… The Pioneer 10 and 11 space-probes, launched by NASA in the early 1970s, are receding from the sun slightly more slowly than they should be. According to a painstakingly detailed study by the Jet Propulsion Laboratory, the part of NASA responsible for the craft, there is no conventional explanation for this. There may, of course, be no relationship with the Allais effect, but Dr. Chris Duif, a researcher at the Delft University of Technology (Netherlands), points out that the anomalous force felt by both Pioneer probes (which are travelling in opposite directions from the sun) is about the same size as that measured by some gravimeters during solar eclipses. – Creepy!

TGV 001 prototype

One of the most interesting items exhibited at the museum, at least for me, is the TGV high-speed train prototype that was actually used during the wind tunnel aerodynamic tests in the late 60s. A remarkably rare item!

When Japan introduced the Shinkansen bullet train in 1964, France could not stay behind. High-speed trains had to compete with cars and airplanes, and also shorten travel times between Paris and the rest of the country. In 1966 the research department of the French railways SNCF started the C03 project: a plan for trains ‘à très grande vitesse’ on specially constructed new tracks.

TGV 001 was a high-speed railway train built in France. It was the first TGV prototype, commissioned in 1969 and developed in the 1970s by GEC-Alsthom and SNCF. Originally, the TGV trains were to be powered by gas turbines. The first prototypes were equipped with helicopter engines of high power and relatively low weight, but after the 1973 oil crisis electricity was preferred. Even so, parts of the experimental TGV 001 were used in the final train, which was inaugurated in 1981. Many design elements and the distinct orange livery also remained.

The first TGV service was the beginning of an extensive high-speed network built over the next 25 years. In 1989 the LGV Atlantique opened, running from Paris in the direction of Brittany. The new model raised the speed record to 515 km/h. Later on, the TGV Duplex was introduced, a double-decker train with 45% more capacity. In the 1990s the LGV Rhône-Alpes and LGV Nord were constructed, and in the early 21st century the LGV Est and LGV Méditerranée followed. On the latter, Marseille can be reached from Paris in only 3 hours. The TGV-based Thalys links Paris to Brussels, Amsterdam and Cologne. The Eurostar to London was also derived from the TGV.

Today, there are a number of TGV derivatives serving across Europe with different names, different colours, and different technology. However, some things never change, such as comfort, luxury, and high speed!

Conclusion

Set in the heart of Paris, the Musée des Arts et Métiers represents a new generation of museums that aim to enrich general knowledge by showing, in a moving way, how original objects work, and by reconciling Art and Science. The odd juxtaposition of centuries of monastic simplicity with centuries of technological progress delights visitors. Thus, the museum symbolically bridges the illusory divide between technology and spirituality.

What does he see? Is he mistaken?

The church has become a warehouse!

There where tombs once stood

A water basin lies instead;

Here, the blades of a turbine rotate,

There, a hydraulic press is running;

Here, in a high-pressure machine,

Steam sings a new song.

An homage to electromagnetics

Spread widely by the telephone.

And electrical lighting

Chases away the sacred demi-jour;

We then understand that the church

Is now a Musée des Métiers;

Arts et Métiers, here, are worshipped,

Utilitarian minds at least will be satisfied!

August Strindberg, Sleepwalking Nights on Wide-Awake Days – (1883)

References:

The Musée des Arts et Métiers, Guide to the Collections, Serge Chambaud – ©Musée des Arts et Métiers-CNAM, ©Éditions Artlys, Paris, 2014.

The Musée des Arts et Métiers, Beaux Arts magazine, A Collection of Special Issues – ©Collection Beaux Arts, 70, rue Compans, 75019, Paris, 2015.

The Musée des Arts et Métiers, Laboratoires de L’Art, Olivier Faron – ©Musée des Arts et Métiers-CNAM, ©Mudam Luxembourg, Musée d’Art Moderne Grand-Duc Jean, ©Éditions Hermann, Paris, 2016.